Well, having looked at no more than your screenshots and read the words "genetic algorithm", I feel like saying GREAT WORK... I remember the long talks between the level designers and the CEO of radonlabs (my old workplace) about bot 'intelligence'.

Then again, I remember talking with an old school friend who studied AI in Osnabrück, Germany, who told me that there is no such thing as AI so far. I don't really know what to believe; anyway, I know how potentially powerful the stuff you're playing with is... keep it up, please...

wouldn't that be something for irrAI, JP ?

EDIT: what you define as a valid goal over the course of evolution is going to strongly affect the outcome, so maybe turning speed shouldn't really be a valid goal. Judge them on collected food only, maybe?

Well, the most impressive example I ever saw was the 2D top-down vehicle 'drive around the block' thing... Small cars with old-school physics (lots of rotational inertia, resulting in mass pile-up behaviour) trying to go around a square block. The only sensor was collision with a wall or another car, with ray tests maybe developed later. I think it spawned 20 - 30 each generation. The first generation always hit the first wall, except for one or two which tried to go left or right without even knowing whether they went the right or wrong way... the second generation really all went the right way, since the only ones that could be judged as fit were those that by coincidence went the right way the first time... the third generation was already going around the second corner, the fourth, fifth and sixth or so already made it around the block once, and going further they learned to avoid each other and so on... after letting it run for 30 min. or so they were godlike and better than any human supercars player that I've ever seen playing... a perfect example, probably written by a professor... again... keep it up
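To show what I mean about the goal shaping the outcome, here is a minimal sketch. Everything in it is made up for illustration (`VehicleStats`, the two fitness functions, the `turn_weight` value); it is not taken from the project's code. It only shows that the same two vehicles can rank differently depending on whether turning speed counts.

```python
from dataclasses import dataclass


@dataclass
class VehicleStats:
    """Hypothetical per-vehicle statistics gathered over one generation."""
    food_collected: int
    mean_turn_speed: float  # radians per tick


def fitness_food_only(stats: VehicleStats) -> float:
    # Judge a vehicle purely on what it achieved: food collected.
    return float(stats.food_collected)


def fitness_mixed(stats: VehicleStats, turn_weight: float = 2.0) -> float:
    # Assumed alternative that also rewards turning speed.
    # turn_weight is an arbitrary illustrative value.
    return stats.food_collected + turn_weight * stats.mean_turn_speed


forager = VehicleStats(food_collected=5, mean_turn_speed=0.2)
spinner = VehicleStats(food_collected=3, mean_turn_speed=1.5)

best_food_only = max([forager, spinner], key=fitness_food_only)
best_mixed = max([forager, spinner], key=fitness_mixed)

print("food only picks the forager:", best_food_only is forager)
print("mixed goal picks the spinner:", best_mixed is spinner)
```

With the mixed goal the fast-turning vehicle wins even though it collected less food, which is exactly the kind of side effect I would try to avoid.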

EDIT2: everyone here, I guess, somehow takes pathfinding (like Argorha pathfinding <- deserves more credit) for granted, even though you can probably find a lot of very good concepts in the source if you search and try to understand... maybe take a look?

EDIT3: think darwin... it's the year...

EDIT4: looking at supercars again, I feel like saying, and yes I'm drunk, when will they ever teach them humor ?

Like for example...

You can see it's lap 1 of 5, the race has just started, and the player (human) is motivated. While still adjusting to the new course (race track), the player is not performing too well. Up comes the car ramp (car jumping obstacle), the player does well, goes around a few more curves and reaches the finish line.... So that was the first lap; naturally the player has now adjusted quite well, he has seen the whole race course. Now short-term memory still works and lap 2 goes quite fluently, confidence kicks in, and long-term memory (RNS -> ZNS) begins to memorize. Lap 3 seems to be going well, when at about the middle of the lap the player starts acting sloppy, hitting other cars and walls. There comes the car jumping obstacle again, and the player somehow nearly crashes against the opposing wall by hitting another car in mid-air, just about makes it, and at the same time saves the opponent car's life, which wouldn't have made the jump without the player's aiding velocity... There comes motivation again: the player tries to punish the undeserving dummy on purpose by hitting the opponent a few more times, making it crash against a wall. Now there is an example of difficult maneuvering motivated only by revenge... a perfectly human ability... back to the beginning, I guess it will be hard to teach that kind of behaviour.... try giving them dopamine, serotonin and adrenaline...

EDIT5:

in other words, give them extra good rotation speed when 'feeling' rewarded, happy or scared
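A rough sketch of how that could look, assuming each vehicle carries two made-up 'mood' values in the range 0..1 (`reward` and `fear`) and that the mood simply scales the base rotation speed. The function name and the `boost` factor are my own invention, not anything from the project.

```python
def turn_rate(base_rate: float, reward: float, fear: float, boost: float = 0.5) -> float:
    """Scale the base rotation speed by the vehicle's current 'mood'.

    reward and fear are hypothetical signals; values outside 0..1 are clamped.
    boost is the assumed maximum extra fraction of rotation speed.
    """
    # Whichever feeling is stronger drives the arousal level.
    arousal = max(0.0, min(1.0, max(reward, fear)))
    return base_rate * (1.0 + boost * arousal)


calm = turn_rate(2.0, reward=0.0, fear=0.0)
rewarded = turn_rate(2.0, reward=1.0, fear=0.0)
scared = turn_rate(2.0, reward=0.0, fear=5.0)  # clamped to full arousal

print(calm, rewarded, scared)
```

A calm vehicle turns at its base rate, while a fully rewarded or fully scared one gets the whole boost.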

EDIT6:(after which I will watch 24S07, jack bauer saving the world)

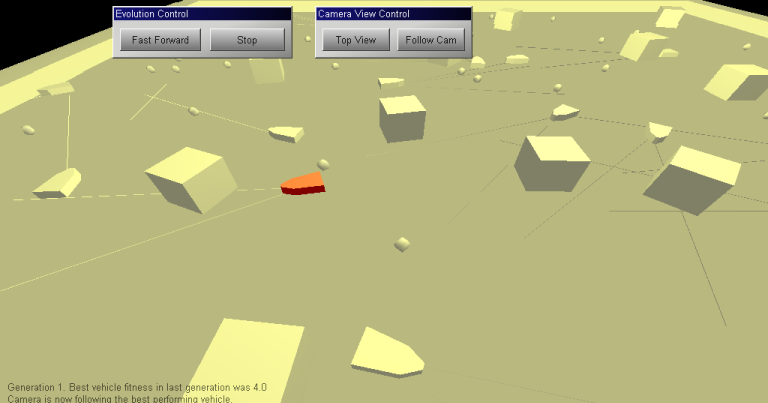

xDan wrote:and also there you should see the best fitness in each generation (so that should improve over time). generation is just the period of time they are left to run around the environment before they are killed and a new population created from the "fittest" vehicles (with crossover - freaky vehicular sex - and all that..)
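Just to picture what that generation step roughly looks like, here is a minimal sketch. It assumes a genome is a plain list of floats and uses truncation selection, one-point crossover, a bit of Gaussian mutation and one elite carried over unchanged. None of these choices, names or parameter values come from xDan's code; the toy fitness (sum of the genes) is only there so the loop runs.

```python
import random


def next_generation(population, fitnesses, rng, n_parents=4,
                    mutation_rate=0.05, mutation_scale=0.1):
    """Build a new population from the fittest genomes of the old one."""
    # Rank genomes by fitness, best first (sort on fitness only).
    ranked = sorted(zip(fitnesses, population), key=lambda pair: pair[0], reverse=True)
    parents = [genome for _, genome in ranked[:n_parents]]

    # Elitism: the best genome survives unchanged.
    children = [list(parents[0])]
    while len(children) < len(population):
        mum, dad = rng.sample(parents, 2)
        cut = rng.randrange(1, len(mum))  # one-point crossover position
        child = mum[:cut] + dad[cut:]
        # Occasionally nudge a gene with Gaussian noise.
        child = [g + rng.gauss(0.0, mutation_scale) if rng.random() < mutation_rate else g
                 for g in child]
        children.append(child)
    return children


rng = random.Random(0)
population = [[rng.uniform(-1.0, 1.0) for _ in range(6)] for _ in range(10)]
best_per_generation = []
for _ in range(5):
    fitnesses = [sum(genome) for genome in population]  # toy fitness
    best_per_generation.append(max(fitnesses))
    population = next_generation(population, fitnesses, rng)

print(best_per_generation)
```

Because the best genome is always kept, the best fitness per generation cannot go down in this sketch, which matches the "should improve over time" display.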

Choose wisely what you count as fitness and what not, and try to avoid unintelligent going in circles. Think route planning... I would love the concept of reverse thrust to brake in time (more Newton-based movement); maybe think about centripetal force for sharp curves, and failing like bugs which roll onto their backs, unable to roll back. Maybe integrate irrNewt car physics or generally irrPhysX.
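One possible way to keep circling from paying off, as a sketch only: score a vehicle by how many distinct grid cells its path touched instead of by distance travelled. The function name, the grid idea and the `cell_size` value are my assumptions, not something the project does.

```python
import math


def coverage_fitness(path, cell_size=1.0):
    """Count the distinct grid cells visited by a path of (x, y) positions."""
    cells = {(math.floor(x / cell_size), math.floor(y / cell_size)) for x, y in path}
    return len(cells)


# A vehicle spinning in a tight circle inside one cell...
circler = [(0.5 + 0.4 * math.cos(0.3 * i), 0.5 + 0.4 * math.sin(0.3 * i))
           for i in range(20)]
# ...and one driving in a straight line for the same number of steps.
explorer = [(0.25 + 0.5 * i, 0.5) for i in range(20)]

print(coverage_fitness(circler), coverage_fitness(explorer))
```

Both vehicles take 20 steps, but the circler only ever touches one cell, so under this measure going in circles earns next to nothing.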

astound us...