I'm not sure whether I should have started a new thread for this, but it's semi-related and this thread came up in my search results.

I already asked on the FFmpeg mailing list and the FFmpeg subreddit, but neither has gotten a response in a few days, so I thought I'd try here.

I started an Irrlicht project that uses FFmpeg to record the screen and then uses Irrlicht to display it in real time on a texture.

That part is all working, but now I'm trying to also save the recording to a file (probably in .mp4 format). I figured someone here might be better than me at dealing with pixel formats and such.

I got the mp4 file to open and start writing. Here's the code that writes the actual screen pixels to that file:

```cpp
#include <iostream>

extern "C" {
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libswscale/swscale.h>
}

void OutputVideoPlayer::pushFrame(AVFrame* pFrame) {
    int err;

    // Lazily allocate the YUV420P frame that is handed to the encoder.
    if (!videoFrame) {
        videoFrame = av_frame_alloc();
        videoFrame->format = AV_PIX_FMT_YUV420P;
        videoFrame->width = cctx->width;
        videoFrame->height = cctx->height;
        if ((err = av_frame_get_buffer(videoFrame, 32)) < 0) {
            std::cout << "Failed to allocate picture" << err << std::endl;
            return;
        }
    }

    // Lazily create a converter from packed RGB24 input to YUV420P.
    if (!swsCtx) {
        swsCtx = sws_getContext(cctx->width, cctx->height, AV_PIX_FMT_RGB24,
                                cctx->width, cctx->height, AV_PIX_FMT_YUV420P,
                                SWS_BICUBIC, 0, 0, 0);
    }

    // From RGB to YUV
    sws_scale(swsCtx, (uint8_t const* const*)pFrame->data, pFrame->linesize, 0, cctx->height,
              videoFrame->data, videoFrame->linesize);

    //videoFrame->data[0] = pFrame->data[0];

    // 30 fps expressed in 1/90000-second ticks.
    videoFrame->pts = (1.0 / 30.0) * 90000 * (frameCounter++);
    std::cout << videoFrame->pts << " " << cctx->time_base.num << " "
              << cctx->time_base.den << " " << frameCounter << std::endl;

    if ((err = avcodec_send_frame(cctx, videoFrame)) < 0) {
        std::cout << "Failed to send frame" << err << std::endl;
        return;
    }

    AVPacket pkt;
    av_init_packet(&pkt);
    pkt.data = NULL;
    pkt.size = 0;
    pkt.flags |= AV_PKT_FLAG_KEY;

    // Fetch at most one encoded packet per pushed frame and write it out.
    if (avcodec_receive_packet(cctx, &pkt) == 0) {
        av_interleaved_write_frame(ofctx, &pkt);
        av_packet_unref(&pkt);

        if (--TEST <= 0) {
            finish();
            free();
        }
    }
}
```
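The pts line above hard-codes 90000 ticks per second at 30 fps, so it only lines up with the encoder when `cctx->time_base` is 1/90000 (which is what printing `time_base` checks). Here's a minimal, FFmpeg-free sketch of the general frame-index-to-pts conversion for any frame rate and time base, rounding to nearest as FFmpeg's `av_rescale_q()` does by default. The `Rational` struct and `framePts()` are hypothetical names for illustration; in FFmpeg code the equivalent would typically be `av_rescale_q(frameIndex, av_inv_q(frameRate), cctx->time_base)`.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for AVRational: value = num / den.
struct Rational {
    int64_t num;
    int64_t den;
};

// pts = frameIndex * (1 / frameRate) / timeBase
//     = frameIndex * frameRate.den * timeBase.den / (frameRate.num * timeBase.num),
// rounded to the nearest integer. Assumes non-negative inputs and no overflow.
int64_t framePts(int64_t frameIndex, Rational frameRate, Rational timeBase) {
    assert(frameRate.num > 0 && frameRate.den > 0 && timeBase.num > 0 && timeBase.den > 0);
    const int64_t numer = frameIndex * frameRate.den * timeBase.den;
    const int64_t denom = frameRate.num * timeBase.num;
    return (numer + denom / 2) / denom;
}
```

With the 30 fps and 1/90000 combination from the post this yields 3000 ticks per frame, while a 1/30 time base yields one tick per frame; whichever time base the encoder actually reports is the one the pts has to be expressed in.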
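For reference, `pushFrame()` builds its swscale context with `AV_PIX_FMT_RGB24` as the source format, so it treats `pFrame->data[0]` as packed 3-byte R,G,B pixels, and it produces planar `AV_PIX_FMT_YUV420P`: a full-size Y plane plus U and V planes at half width and half height. The sketch below spells that conversion out by hand, assuming the common BT.601 limited-range integer coefficients, plain 2×2 chroma averaging and even dimensions. swscale's real coefficients and filtering (e.g. `SWS_BICUBIC`) differ, and `Yuv420pImage` / `rgb24ToYuv420p()` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical container for one planar YUV420P image.
struct Yuv420pImage {
    int width = 0, height = 0;
    std::vector<uint8_t> y, u, v; // y: width*height, u and v: (width/2)*(height/2)
};

// BT.601 limited-range integer approximation. For chroma, adding 32768 (= 128 << 8)
// keeps the shifted value non-negative; the result equals floor((x + 128) / 256) + 128.
static uint8_t lumaOf(int r, int g, int b) {
    return static_cast<uint8_t>(((66 * r + 129 * g + 25 * b + 128) >> 8) + 16);
}
static uint8_t cbOf(int r, int g, int b) {
    return static_cast<uint8_t>((-38 * r - 74 * g + 112 * b + 128 + 32768) >> 8);
}
static uint8_t crOf(int r, int g, int b) {
    return static_cast<uint8_t>((112 * r - 94 * g - 18 * b + 128 + 32768) >> 8);
}

// src: packed RGB24 with `stride` bytes per row (stride >= width * 3).
Yuv420pImage rgb24ToYuv420p(const uint8_t* src, int stride, int width, int height) {
    assert(width % 2 == 0 && height % 2 == 0 && stride >= width * 3);
    Yuv420pImage out;
    out.width = width;
    out.height = height;
    out.y.resize(static_cast<size_t>(width) * height);
    out.u.resize(static_cast<size_t>(width / 2) * (height / 2));
    out.v.resize(out.u.size());

    // Full-resolution luma plane.
    for (int row = 0; row < height; ++row) {
        const uint8_t* p = src + static_cast<size_t>(row) * stride;
        for (int col = 0; col < width; ++col, p += 3)
            out.y[static_cast<size_t>(row) * width + col] = lumaOf(p[0], p[1], p[2]);
    }

    // Chroma planes: one U and one V sample per 2x2 block, from the block's average RGB.
    for (int row = 0; row < height; row += 2) {
        for (int col = 0; col < width; col += 2) {
            int r = 0, g = 0, b = 0;
            for (int dy = 0; dy < 2; ++dy) {
                for (int dx = 0; dx < 2; ++dx) {
                    const uint8_t* p = src + static_cast<size_t>(row + dy) * stride + (col + dx) * 3;
                    r += p[0];
                    g += p[1];
                    b += p[2];
                }
            }
            r = (r + 2) / 4;
            g = (g + 2) / 4;
            b = (b + 2) / 4;
            const size_t idx = static_cast<size_t>(row / 2) * (width / 2) + col / 2;
            out.u[idx] = cbOf(r, g, b);
            out.v[idx] = crOf(r, g, b);
        }
    }
    return out;
}
```

The point of the sketch is the layout: the converter reads exactly three bytes per pixel in R,G,B order from each source row, so the bytes it is given have to be in that layout for the output planes to mean anything.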

And here's how I'm updating the Irrlicht texture, including where the function above gets called:

```cpp
#include <cstring> // memcpy
#include <irrlicht.h>

extern "C" {
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libswscale/swscale.h>
}

using irr::video::IImage;

/// Copies data from the decoded frame to the Irrlicht image
void writeVideoTexture(AVFrame* pFrame, IImage* imageRt) {
    if (pFrame->data[0] == NULL) {
        return;
    }

    int width = imageRt->getDimension().Width;
    int height = imageRt->getDimension().Height;

    uint8_t* frameData = pFrame->data[0];
    unsigned char* data = (unsigned char*)(imageRt->lock());

    // Decoded format is R8G8B8. This single memcpy assumes neither buffer
    // has row padding (pFrame->linesize[0] == width * 3).
    memcpy(data, frameData, width * height * 3);

    imageRt->unlock();
}

int VideoPlayer::decodeFrameInternal() {
    int ret = 0;

    // Parenthesized so ret holds av_read_frame()'s result, not the comparison.
    if ((ret = av_read_frame(pFormatCtx, &packet)) >= 0) {
        // Is this a packet from the video stream?
        if (packet.stream_index == videoStream) {
            // Decode video frame
            avcodec_send_packet(pCodecCtx, &packet);

            while (ret >= 0) {
                ret = avcodec_receive_frame(pCodecCtx, pFrame);
                if (ret == AVERROR(EAGAIN)) {
                    ret = 0;
                    avcodec_send_packet(pCodecCtx, &packet);
                    break;
                }
                if (ret < 0) {
                    break; // decoder error or end of stream: no new frame to convert
                }

                //pFormatCtx->duration

                // Convert the image from its native format to RGB
                sws_scale(sws_ctx, (uint8_t const* const*)pFrame->data,
                          pFrame->linesize, 0, pCodecCtx->height,
                          pFrameRGB->data, pFrameRGB->linesize);

                writeVideoTexture(pFrameRGB, imageRt);
                ovp.pushFrame(pFrame); // OR writeFrame(pFrameRGB), not really sure there's a difference other than conversion in pushFrame()
            }
            ret = 0;
        }

        // Free the packet that was allocated by av_read_frame
        av_packet_unref(&packet);
    }

    return ret;
}
```
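`writeVideoTexture()` copies `width * height * 3` bytes in one `memcpy`, which only matches a row-by-row copy when both buffers are tightly packed. FFmpeg frames carry a per-plane `linesize` that can include alignment padding, and Irrlicht's `IImage` reports its own row size via `getPitch()`. Here's a minimal sketch of a copy that honors both strides; `copyRows()` is a hypothetical helper, and in the function above its arguments would presumably be the locked image data with `imageRt->getPitch()`, and `pFrameRGB->data[0]` with `pFrameRGB->linesize[0]`, assuming both sides really hold 3-byte RGB pixels.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Copies `height` rows of `rowBytes` bytes each between two buffers whose rows
// start `dstStride` / `srcStride` bytes apart. Strides may include padding but
// are assumed positive and at least `rowBytes`; padding bytes are never copied.
void copyRows(uint8_t* dst, std::ptrdiff_t dstStride,
              const uint8_t* src, std::ptrdiff_t srcStride,
              std::size_t rowBytes, int height) {
    assert(dstStride >= static_cast<std::ptrdiff_t>(rowBytes));
    assert(srcStride >= static_cast<std::ptrdiff_t>(rowBytes));
    for (int row = 0; row < height; ++row) {
        std::memcpy(dst + row * dstStride, src + row * srcStride, rowBytes);
    }
}
```

A hypothetical call would look like `copyRows(data, imageRt->getPitch(), pFrameRGB->data[0], pFrameRGB->linesize[0], width * 3, height)`; copying per row keeps any padding at the end of each source row from shifting the following rows.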

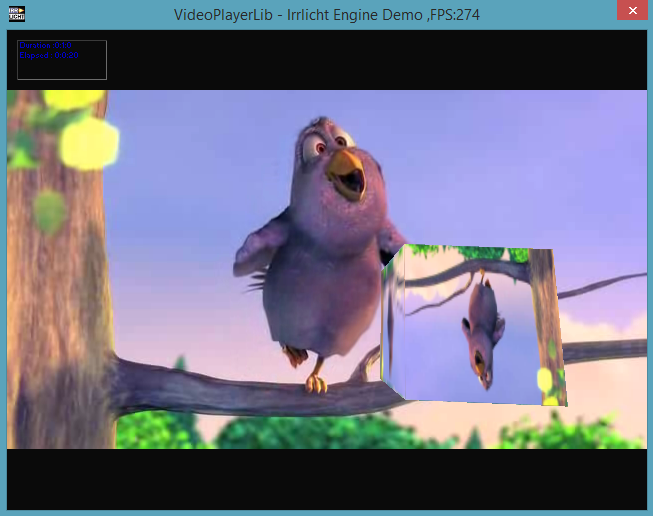

The best I can get it to display looks really bad, like this:

Any ideas on why this might be?

The full, compilable code and instructions to build it are here:

https://github.com/treytencarey/IrrlichtVideoPlayerLib/